Australian (and especially South Australian) electricity is expensive. That is probably part of the reason that SA is leading the world in residential rooftop solar.

But it is hard to negotiate the electricity retail market. Knowing your daily usage and feed-in enables you to determine which retailer you should choose, but only if you have the tools available to you to compare what your costs would have been with each retail plan.

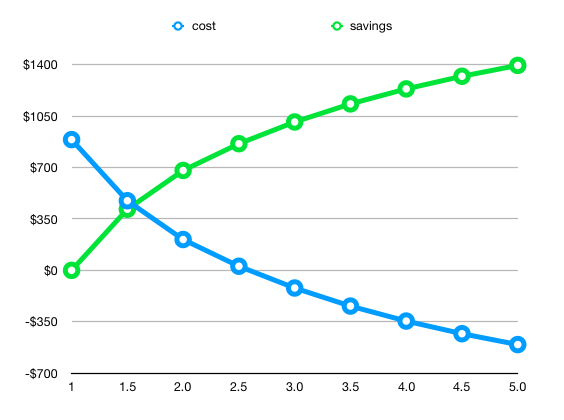

And this does not even begin to address the question of whether it is cost-effective to increase the size of your PV generation system: most supply companies (who, as it turns out, are different entities to the retailers) have per-phase export limits of 5 kW. That is, you may never feed more than 5 kW back into the grid at any point in time.

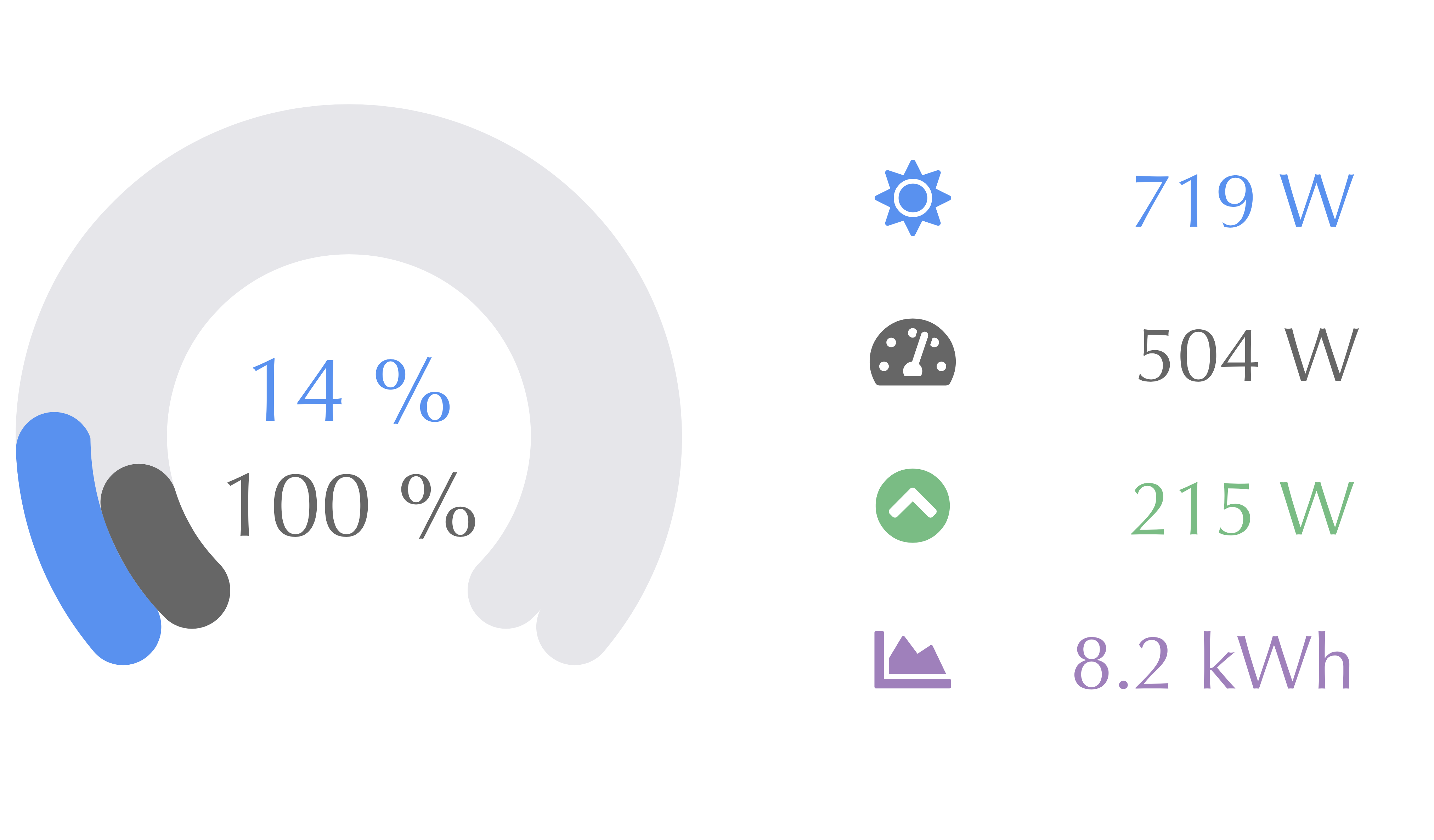

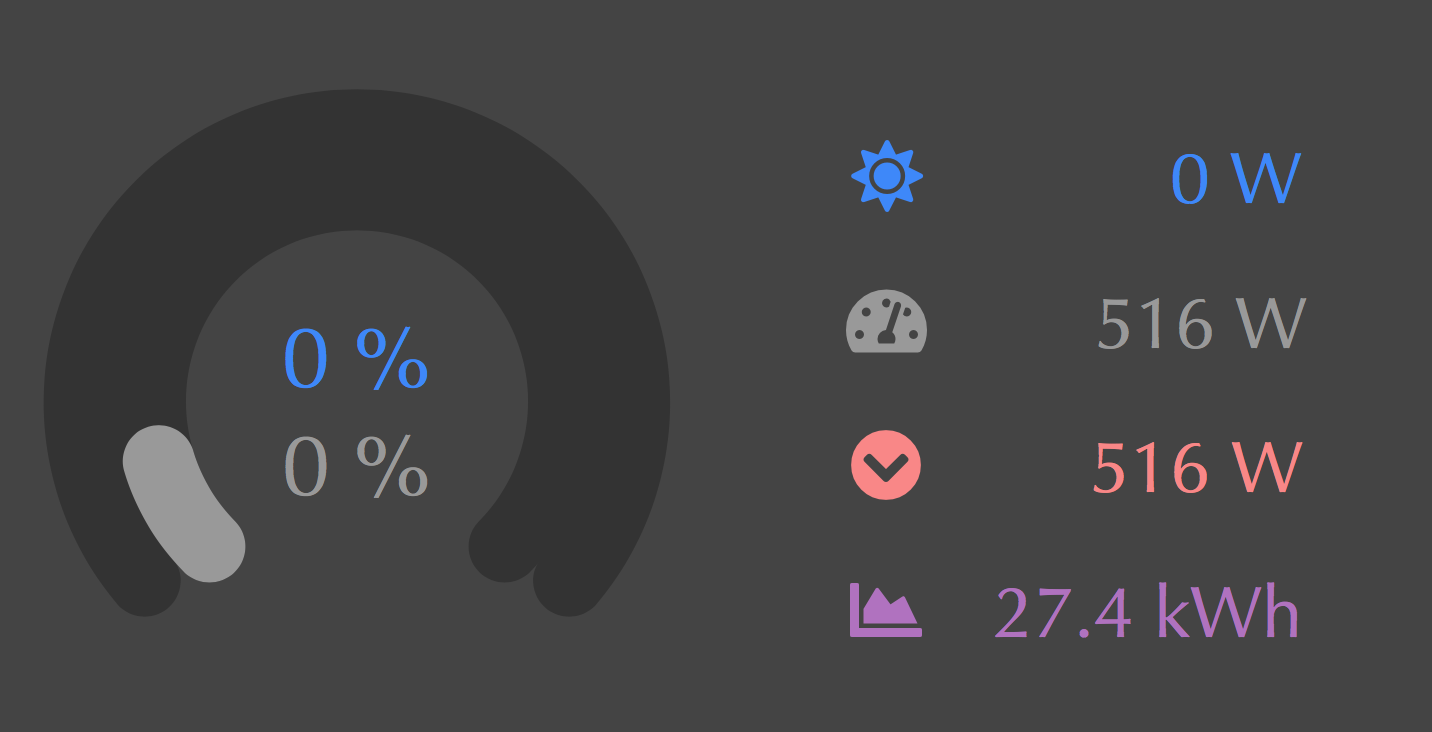

Since I had my Fronius Smart Meter installed, I have been able to run reports and get my daily import and export values: these are also available through my retailer, and even through SA Power Networks, the distributor.

I have my data stored in three tables:

CREATE TABLE generation (

"timestamp" TIMESTAMP UNIQUE PRIMARY KEY,

"energy" FLOAT

);

CREATE TABLE import_cumulative (

"timestamp" TIMESTAMP UNIQUE PRIMARY KEY,

"energy" INTEGER

);

CREATE TABLE export_cumulative (

"timestamp" TIMESTAMP UNIQUE PRIMARY KEY,

"energy" INTEGER

);

This is really only necessary because I need to take the cumulative values in the import and export tables, and turn them into the amount imported or exported during each individual period:

CREATE OR REPLACE VIEW import AS (

SELECT timestamp,

energy - LAG(energy, 1) OVER (ORDER BY timestamp) AS energy

FROM import_cumulative

ORDER BY timestamp

);

CREATE OR REPLACE VIEW export AS (

SELECT timestamp,

energy - LAG(energy, 1) OVER (ORDER BY timestamp) AS energy

FROM export_cumulative

ORDER BY timestamp

);
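To see what `LAG` is doing in those two views, here is a small self-contained sketch. The readings are made up for illustration (they are not real meter data); each output row is the difference between a cumulative reading and the one before it:

```sql
-- Hypothetical cumulative meter readings in Wh.
WITH readings("timestamp", energy) AS (
  VALUES ('2019-03-07 00:00'::TIMESTAMP, 1000),
         ('2019-03-07 00:05'::TIMESTAMP, 1040),
         ('2019-03-07 00:10'::TIMESTAMP, 1095)
)
SELECT "timestamp",
       energy - LAG(energy, 1) OVER (ORDER BY "timestamp") AS energy
  FROM readings
 ORDER BY "timestamp";
-- energy column: NULL, 40, 55
```

The first row is NULL because there is no earlier reading to subtract. `SUM` skips NULLs, so that row does not break any later aggregates.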

Now, from these views, it is possible to do some modelling of what different retailers would cost, but retail plans are generally priced per day, so we need a view that combines everything into daily values. Since I will be doing things later that also need consumption and generation, I will include those too:

CREATE OR REPLACE VIEW consumption AS

SELECT timestamp,

import.energy + generation.energy - export.energy AS energy

FROM generation

INNER JOIN import USING (timestamp)

INNER JOIN export USING (timestamp);

And our summary (for each interval):

CREATE OR REPLACE VIEW summary AS (

SELECT timestamp,

generation.energy AS generation,

import.energy AS import,

export.energy AS export,

import.energy + generation.energy - export.energy AS consumption

FROM generation

INNER JOIN import USING (timestamp)

INNER JOIN export USING (timestamp)

);

Getting close to having some usable data there now. What about daily summaries?

CREATE OR REPLACE VIEW daily_summary AS (

SELECT timestamp::DATE AS date,

SUM(generation) AS generation,

SUM(import) AS import,

SUM(export) AS export,

SUM(consumption) AS consumption

FROM summary

GROUP BY timestamp::DATE

);

That gives us per-day summaries of our generation, import, export and consumption (all in Wh).

Here are some values from my system:

SELECT * FROM daily_summary LIMIT 10;

| date | generation | import | export | consumption |
|---|---|---|---|---|
| 2019-03-07 | 29851.915 | 7587 | 24191 | 13247.915 |
| 2019-03-08 | 14033.2047222222 | 7578 | 9156 | 12455.2047222222 |
| 2019-03-09 | 28074.8316666667 | 5746 | 20961 | 12859.8316666667 |
| 2019-03-10 | 15239.6936111111 | 7669 | 9144 | 13764.6936111111 |
| 2019-03-11 | 21101.1441666667 | 6528 | 12657 | 14972.1441666667 |
| 2019-03-12 | 28231.4280555556 | 5955 | 22382 | 11804.4280555556 |
| 2019-03-13 | 22880.3077777778 | 6040 | 17411 | 11509.3077777778 |
| 2019-03-14 | 30440.8319444444 | 6658 | 24791 | 12307.8319444444 |
| 2019-03-15 | 30141.3888888889 | 6576 | 26227 | 10490.3888888889 |
| 2019-03-16 | 29286.4413888889 | 7336 | 22224 | 14398.4413888889 |

(10 rows)
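As a sanity check, consumption is just import plus generation minus export, so the figure for 2019-03-07 can be reproduced by hand from the other three columns of that row:

```sql
-- Values (in Wh) copied from the 2019-03-07 row of the output above.
SELECT 7587 + 29851.915 - 24191 AS consumption;
-- 13247.915
```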

Right. So now we want to be able to look at costs for different retailers. There is a magnificent resource for getting this data: Energy Made Easy. The rates differ for different postcodes (WAT): I’m not sure if they are the same across a whole state or not. Probably not.

Anyway, it turns out that there are some retailers who have three different rates, depending upon your usage during a day. It’s not clear to me if this value is averaged across the whole month when you are on a smart meter: I know it is when you are on one of the old spinning disk meters. I assume it would be calculated on each day, as I think that works out as more expensive for the customer. Diamond Energy appears to have per-month limits, and four different rates, so I just pro-rated them to a 30 day month. I did not bother modelling the fourth rate, as I established fairly early on that they are not going to be close to being the cheapest. I may implement that later, just for completeness.

So, to be able to calculate costs for all retailers, we need to store up to three rates, and the two kWh thresholds at which the next rate kicks in. Note that the second

CREATE TABLE retailer (

name TEXT PRIMARY KEY,

supply_charge NUMERIC NOT NULL,

kWh_rate_1 NUMERIC NOT NULL,

kWh_limit_1 NUMERIC,

kWh_rate_2 NUMERIC,

kWh_limit_2 NUMERIC,

kWh_rate_3 NUMERIC,

pay_on_time_discount NUMERIC,

feed_in_tariff NUMERIC NOT NULL

);

ALTER TABLE retailer

ADD CONSTRAINT rate_2_requires_limit_1

CHECK (kWh_rate_2 IS NULL OR kWh_limit_1 IS NOT NULL),

ADD CONSTRAINT rate_3_requires_limit_2

CHECK (kWh_rate_3 IS NULL OR kWh_limit_2 IS NOT NULL),

ADD CONSTRAINT rate_3_requires_rate_2

CHECK (kWh_rate_3 IS NULL OR kWh_rate_2 IS NOT NULL);

The three check constraints just ensure that we do not accidentally insert a rate where there is no lower tier rate, or no limit in place to apply it from.

Here is a query that inserts values for the retailers for my postcode:

INSERT INTO retailer (

name, supply_charge,

kWh_rate_1, kWh_limit_1,

kWh_rate_2, kWh_limit_2,

kWh_rate_3,

feed_in_tariff,

pay_on_time_discount)

VALUES

('AGL Essentials', 0.8855, 0.3575, NULL, NULL, NULL, NULL, 0.1630, NULL),

('Origin Energy', 0.9033, 0.4002, 4, 0.4272, NULL, NULL, 0.1800, 0.10),

('AGL Solar Savers', 0.8910, 0.4038, NULL, NULL, NULL, NULL, 0.1800, NULL),

('Click Banksia Solar', 0.9966, 0.3941, NULL, NULL, NULL, NULL, 0.1700, NULL),

('Power Direct Residential Saver', 0.8910, 0.4038, NULL, NULL, NULL, NULL, 0.1420, 0.13),

('SA Simply RAA VPP', 0.9863, 0.3600, NULL, NULL, NULL, NULL, 0.1500, NULL),

('Kogan', 0.9566, 0.3154, NULL, NULL, NULL, NULL, 0.0748, NULL),

('PowerShop Shopper Market', 1.1912, 0.3760, NULL, NULL, NULL, NULL, 0.1020, 0.15),

('AGL Residential Essentials', 0.7752, 0.3512, NULL, NULL, NULL, NULL, 0.1420, NULL),

('Diamond Energy Everyday Renewable Saver', 0.9565, 0.3659, 3.33, 0.3948, 11.1, 0.4285, 0.12, NULL),

('Red Energy Living Energy Saver', 0.9790, 0.3641, NULL, NULL, NULL, NULL, 0.1200, NULL),

('Blue NRG DMO - General Usage', 0.7810, 0.3736, 10.96, 0.5775, NULL, NULL, 0.0680, NULL),

('Energy Australia Total Plan Home', 0.8580, 0.4070, NULL, NULL, NULL, NULL, 0.15, NULL),

('Alinta Energy No Fuss (Single Rate)', 0.9130, 0.3317, 3.29, 0.3695, NULL, NULL, 0.0950, NULL),

('Lumo Basic', 1.0120, 0.3539, NULL, NULL, NULL, NULL, 0.15, NULL),

('Powerband Home Flat + Solar FIT', 1.0322, 0.3066, 10.95, 0.3139, NULL, NULL, 0.1150, NULL),

('Origin Energy Econnex', 0.9081, 0.4024, 10.96, 0.4294, NULL, NULL, 0.1000, 0.15)

ON CONFLICT DO NOTHING

;

To calculate the cost for a day, we need to add the daily supply charge, and the amount of import that applies at each rate multiplied by that rate, minus the FIT (feed-in tariff) for the export amount. Because some retailers have a “pay on time discount” (which is really just a late-payment penalty, but that is another blog post waiting to happen), it’s important to note that this discount applies to the whole bill - meaning it needs to be applied after the feed-in tariff has been subtracted.
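For a flat-rate plan with no pay-on-time discount, that calculation is simple enough to do inline. This sketch plugs the AGL Essentials rates from the insert above and the 2019-03-07 import and export figures (in Wh) into the formula. It is only a worked example of the arithmetic, and does not handle tiers or discounts:

```sql
SELECT 0.8855                      -- daily supply charge ($)
     + 7587 / 1000.0 * 0.3575      -- import: 7.587 kWh at the single rate
     - 24191 / 1000.0 * 0.1630     -- export: 24.191 kWh at the feed-in tariff
       AS cost;
-- roughly -0.35, that is, a credit of about 35 cents for the day
```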

Here is a function that calculates a daily cost, given a retailer record and import and export values:

CREATE OR REPLACE FUNCTION cost(retailer RETAILER, import INTEGER, export INTEGER)

RETURNS NUMERIC AS $$

-- import and export are in Wh; the kWh limits are scaled to Wh before comparing.

SELECT COALESCE(1 - retailer.pay_on_time_discount, 1.0) * (retailer.supply_charge +

-- rate 3: usage above limit 2

CASE WHEN retailer.kWh_limit_2 IS NULL THEN 0

WHEN import <= retailer.kWh_limit_2 * 1000 THEN 0

ELSE import - retailer.kWh_limit_2 * 1000

END / 1000.0 * COALESCE(retailer.kWh_rate_3, 0) +

-- rate 2: usage between limit 1 and limit 2

CASE WHEN retailer.kWh_limit_1 IS NULL THEN 0

WHEN retailer.kWh_limit_2 IS NULL THEN GREATEST(import - retailer.kWh_limit_1 * 1000, 0)

WHEN import < retailer.kWh_limit_1 * 1000 THEN 0

ELSE LEAST(import, retailer.kWh_limit_2 * 1000) - retailer.kWh_limit_1 * 1000

END / 1000.0 * COALESCE(retailer.kWh_rate_2, 0) +

-- rate 1: usage up to limit 1

CASE WHEN retailer.kWh_limit_1 IS NULL THEN import

ELSE LEAST(import, retailer.kWh_limit_1 * 1000)

END / 1000.0 * retailer.kWh_rate_1 -

retailer.feed_in_tariff * export / 1000.0);

$$ LANGUAGE SQL STRICT;

It is a little hard to follow, and if the daily amount is negative (that is, a credit), the discount shrinks that credit instead of being ignored, but it will do for now. I would prefer not to choose a retailer that hides a penalty behind a so-called discount anyway, so they would have to be significantly cheaper to attract my custom.
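The tier logic may be easier to see on its own. This is a minimal sketch for a hypothetical two-tier plan (the 4 kWh limit and the import values are only examples), assuming the import figure is in Wh and the limit is stored in kWh, so the limit is scaled by 1000 before the two are compared:

```sql
-- Hypothetical plan: the first 4 kWh each day at rate 1, the remainder at rate 2.
SELECT import_wh,
       LEAST(import_wh, limit_kwh * 1000)        AS tier_1_wh,
       GREATEST(import_wh - limit_kwh * 1000, 0) AS tier_2_wh
  FROM (VALUES (7587, 4), (3200, 4)) AS t(import_wh, limit_kwh);
-- 7587 -> 4000 and 3587
-- 3200 -> 3200 and 0
```

Each tier's Wh would then be divided by 1000 and multiplied by that tier's rate.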

Using this function, we can now calculate the total costs for all retailers, and compare them:

SELECT retailer.name,

'$ ' || lpad(

TRUNC(SUM(cost(retailer, import::INTEGER, export::INTEGER)), 2)::TEXT,

9

) AS cost

FROM daily_summary, retailer

GROUP BY retailer.name

ORDER BY cost ASC;

There is a bunch of code in there to make the output prettier: some of that is lost by the HTML output I have used to generate this table. In an SQL view, those decimal points would all line up…

| name | cost |
|---|---|
| AGL Essentials | $ 897.87 |
| AGL Residential Essentials | $ 916.85 |
| Powerband Home Flat + Solar FIT | $ 955.59 |
| Lumo Basic | $ 965.56 |
| Origin Energy | $ 971.44 |
| SA Simply RAA VPP | $ 978.81 |
| Power Direct Residential Saver | $ 979.84 |
| AGL Solar Savers | $ 997.31 |
| Click Banksia Solar | $ 1028.43 |
| PowerShop Shopper Market | $ 1065.24 |
| Kogan | $ 1075.74 |
| Red Energy Living Energy Saver | $ 1092.32 |
| Energy Australia Total Plan Home | $ 1100.55 |
| Origin Energy Econnex | $ 1155.60 |
| Alinta Energy No Fuss (Single Rate) | $ 1176.65 |
| Diamond Energy Everyday Renewable Saver | $ 1302.24 |
| Blue NRG DMO - General Usage | $ 1929.36 |

(17 rows)

Which brings me to my conclusion: my current retailer is the cheapest for my current generation and consumption patterns.

But we can go further. We can always go further.

We can look at different months, and see if it’s always the case that a given retailer is the best option. Perhaps it is worthwhile shopping around, and switching between retailers at different times of the year.

CREATE OR REPLACE VIEW monthly_cost_comparisons AS

SELECT retailer.name,

to_char(date, 'YYYY-MM') AS month,

SUM(cost(retailer, import::INTEGER, export::INTEGER)) AS cost

FROM daily_summary, retailer

GROUP BY retailer.name, to_char(date, 'YYYY-MM');

That by itself is not that useful, but we can use the crosstab function (from the tablefunc extension) to view it in a more readable manner.

CREATE EXTENSION IF NOT EXISTS tablefunc;

SELECT *

FROM crosstab(

$$SELECT name, month, '$ ' || lpad(cost::INTEGER::TEXT, 4)

FROM monthly_cost_comparisons ORDER BY name$$,

'SELECT DISTINCT month FROM monthly_cost_comparisons ORDER BY month'

) AS (

"retailer" TEXT,

"2019-03" TEXT,

"2019-04" TEXT,

"2019-05" TEXT,

"2019-06" TEXT,

"2019-07" TEXT,

"2019-08" TEXT,

"2019-09" TEXT,

"2019-10" TEXT,

"2019-11" TEXT,

"2019-12" TEXT

);

Frustratingly, this function requires enumerating the columns.

| retailer | 2019-03 | 2019-04 | 2019-05 | 2019-06 | 2019-07 | 2019-08 | 2019-09 | 2019-10 | 2019-11 | 2019-12 |
|---|---|---|---|---|---|---|---|---|---|---|
| AGL Essentials | $ 14 | $ 41 | $ 128 | $ 200 | $ 209 | $ 223 | $ 90 | $ 1 | $ 1 | $ -10 |
| AGL Residential Essentials | $ 19 | $ 45 | $ 127 | $ 196 | $ 204 | $ 219 | $ 93 | $ 10 | $ 9 | $ -5 |
| AGL Solar Savers | $ 15 | $ 45 | $ 143 | $ 223 | $ 233 | $ 249 | $ 100 | $ 1 | $ 0 | $ -11 |
| Alinta Energy No Fuss (Single Rate) | $ 46 | $ 72 | $ 147 | $ 216 | $ 225 | $ 242 | $ 122 | $ 47 | $ 46 | $ 13 |
| Blue NRG DMO - General Usage | $ 91 | $ 125 | $ 226 | $ 329 | $ 341 | $ 371 | $ 203 | $ 103 | $ 102 | $ 38 |
| Click Banksia Solar | $ 20 | $ 50 | $ 144 | $ 222 | $ 232 | $ 248 | $ 104 | $ 8 | $ 8 | $ -7 |
| Diamond Energy Everyday Renewable Saver | $ 47 | $ 76 | $ 166 | $ 246 | $ 256 | $ 276 | $ 135 | $ 45 | $ 45 | $ 11 |
| Energy Australia Total Plan Home | $ 28 | $ 57 | $ 149 | $ 228 | $ 237 | $ 255 | $ 112 | $ 19 | $ 19 | $ -2 |
| Kogan | $ 47 | $ 69 | $ 132 | $ 191 | $ 198 | $ 213 | $ 112 | $ 49 | $ 49 | $ 16 |
| Lumo Basic | $ 22 | $ 49 | $ 134 | $ 203 | $ 212 | $ 227 | $ 98 | $ 12 | $ 12 | $ -4 |
| Origin Energy Econnex | $ 46 | $ 71 | $ 143 | $ 211 | $ 219 | $ 236 | $ 120 | $ 48 | $ 47 | $ 14 |
| Origin Energy | $ 17 | $ 45 | $ 136 | $ 213 | $ 222 | $ 238 | $ 98 | $ 5 | $ 5 | $ -8 |
| Power Direct Residential Saver | $ 27 | $ 52 | $ 131 | $ 198 | $ 207 | $ 222 | $ 100 | $ 21 | $ 21 | $ 1 |
| PowerShop Shopper Market | $ 44 | $ 67 | $ 133 | $ 193 | $ 201 | $ 215 | $ 111 | $ 44 | $ 44 | $ 13 |
| Powerband Home Flat + Solar FIT | $ 31 | $ 55 | $ 126 | $ 187 | $ 195 | $ 208 | $ 98 | $ 26 | $ 26 | $ 4 |
| Red Energy Living Energy Saver | $ 36 | $ 63 | $ 142 | $ 212 | $ 221 | $ 237 | $ 112 | $ 32 | $ 32 | $ 6 |
| SA Simply RAA VPP | $ 23 | $ 50 | $ 135 | $ 206 | $ 215 | $ 230 | $ 99 | $ 13 | $ 13 | $ -4 |
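One way to take some of the pain out of enumerating the crosstab columns is to have the database write the column list. This is only a sketch: the date bounds are hard-coded here as an assumption, and the result still has to be pasted into the query (or used from dynamic SQL), because the column definition list must be fixed when the query is parsed:

```sql
-- Builds the text of the AS (...) column list for the months March to December 2019.
SELECT '"retailer" TEXT, '
       || string_agg(format('%I TEXT', to_char(m, 'YYYY-MM')), ', ' ORDER BY m)
       AS column_list
  FROM generate_series('2019-03-01'::TIMESTAMP,
                       '2019-12-01'::TIMESTAMP,
                       INTERVAL '1 month') AS m;
-- column_list starts: "retailer" TEXT, "2019-03" TEXT, "2019-04" TEXT, ...
```

The `%I` format specifier quotes each month as an identifier, which is needed because names like `2019-03` are not valid unquoted column names.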

From this, I can see that nothing is really going to save me more than a few bucks a month: and this does not take into account the $200 “bonus” I get from AGL if I stick with them for a year (or something like that).

I’d also like to look at actual billing periods, because then I can see that everything matches up to what my actual bills are (and then I can add in the bonuses at the times they applied).

My billing periods went to monthly on a specific date, so from then on they are regular one-month periods, but prior to that they were a little all over the place:

CREATE OR REPLACE VIEW billing_periods AS

SELECT 'AGL Essentials'::TEXT AS retailer,

DATERANGE(start::DATE, (start + INTERVAL '1 month')::DATE) as period

FROM generate_series('2019-06-24'::DATE, now()::DATE, INTERVAL '1 month') start

UNION ALL

VALUES ('AGL Essentials'::TEXT, '[2019-03-07,2019-06-01)'::DATERANGE),

('AGL Essentials', '[2019-06-01,2019-06-24)')

ORDER BY period DESC;
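These periods are half-open ranges: the start date is included and the end date is not, so a changeover day such as 2019-06-24 belongs to exactly one period. A quick check with the range containment operator `<@` illustrates this:

```sql
-- The upper bound of a [start,end) range is excluded, the lower bound is included.
SELECT '2019-06-24'::DATE <@ '[2019-06-01,2019-06-24)'::DATERANGE AS in_old_period,
       '2019-06-24'::DATE <@ '[2019-06-24,2019-07-24)'::DATERANGE AS in_new_period;
-- in_old_period is false, in_new_period is true
```

That matters when days are matched to periods, because no day's cost can be counted twice.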

We can model the retailer bonuses so that each one is only applied in the billing period it belongs to, and only when we are calculating the costs for that retailer:

CREATE TABLE retailer_bonus (

retailer TEXT,

billing_period DATERANGE,

bonus NUMERIC

);

INSERT INTO retailer_bonus(retailer, billing_period, bonus)

VALUES ('AGL Essentials', '[2019-08-24,2019-09-24)', 25);

And now some views for calculating the actual costs for each day, and then aggregating within the billing periods (and a pretty version).

CREATE OR REPLACE VIEW actual_costs AS

SELECT retailer,

billing_periods.period,

daily_summary.date,

cost(_ret, import::INTEGER, export::INTEGER),

generation

FROM billing_periods

INNER JOIN retailer _ret ON (_ret.name = billing_periods.retailer)

INNER JOIN daily_summary ON (daily_summary.date <@ billing_periods.period);

CREATE OR REPLACE VIEW billing_period_costs AS

SELECT retailer,

period,

SUM(cost) - COALESCE((SELECT SUM(bonus)

FROM retailer_bonus

WHERE billing_period = period

AND retailer_bonus.retailer = actual_costs.retailer), 0) AS cost,

SUM(generation) AS generation

FROM actual_costs

GROUP BY retailer, period

ORDER BY period DESC;

CREATE OR REPLACE VIEW billing_period_costs_pretty AS

SELECT retailer,

period,

'$ ' || LPAD(TRUNC(cost, 2)::TEXT, 7, ' ') AS cost,

LPAD(TRUNC(generation::NUMERIC / 1000, 1)::TEXT, 6, ' ') || ' kWh' AS generation

FROM billing_period_costs

ORDER BY period DESC;

And now, we can look at the actual bills, with generation for that period:

SELECT * FROM billing_period_costs_pretty;

| retailer | period | cost | generation |
|---|---|---|---|
| AGL Essentials | [2019-11-24,2019-12-24) | $ -9.50 | 595.4 kWh |
| AGL Essentials | [2019-10-24,2019-11-24) | $ -1.20 | 879.0 kWh |
| AGL Essentials | [2019-09-24,2019-10-24) | $ 12.80 | 757.3 kWh |
| AGL Essentials | [2019-08-24,2019-09-24) | $ 104.30 | 610.6 kWh |
| AGL Essentials | [2019-07-24,2019-08-24) | $ 224.27 | 374.2 kWh |
| AGL Essentials | [2019-06-24,2019-07-24) | $ 210.24 | 302.8 kWh |
| AGL Essentials | [2019-06-01,2019-06-24) | $ 148.31 | 244.8 kWh |
| AGL Essentials | [2019-03-07,2019-06-01) | $ 183.65 | 1495.9 kWh |

Note that the first row is the current month: it’s only partway through, so I will end up with even more of a credit than that, with any luck.

These values are all within a dollar or two of the amounts I was actually billed, so that is nice.

But wait, there is more!

We can use this, with just a couple more functions, to make fairly good estimates of what our outputs and costs would be if we had more panels.

But that one will have to wait for another day.